Computer animation: models for facial expression

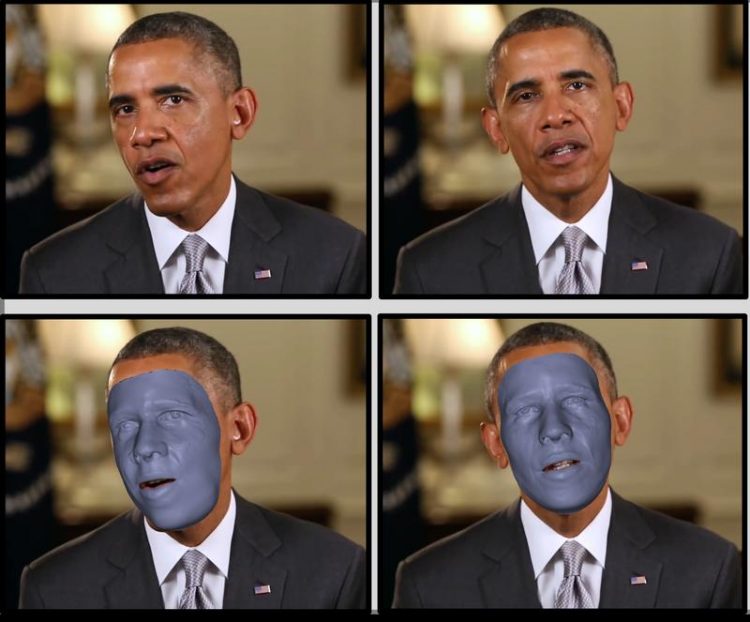

Detailed face rig of Barack Obama (image: MPI)

Computer scientists have developed new methods that reconstruct surfaces, such as faces, from video recordings and then allow them to be altered on the computer.

The scientists from the Max Planck Institute for Informatics and Saarland University will present the new technology at the CeBIT computer fair in Hannover from 14 to 18 March (Hall 6, Stand D 28).

When Brad Pitt lives his life backwards in the film “The Curious Case of Benjamin Button”, changing from an old man into a small child, it took more than a lot of make-up. Every single scene was edited on the computer so that Brad Pitt's face was animated extremely realistically and matched the character's age at each point in the story.

“In the big film studios, they sometimes spend several weeks working on a five-second scene in order to reproduce an actor's appearance and the proportions of their face and body in photo-realistic quality. A lot of the touching-up on the computer is still done by hand,” says Christian Theobalt, head of the “Graphics, Vision and Video” group at the Max Planck Institute for Informatics in Saarbruecken and Professor of Informatics at Saarland University.

Film-makers use the same technology to insert fantasy figures such as zombies, orcs or fauns into films and to give them sad expressions or striking laugh lines around their eyes.

Together with his research group, Christian Theobalt now wants to speed up this process significantly. “One challenge is that we perceive actors' facial expressions very precisely, and we notice immediately if a single blink doesn't look authentic or if the mouth doesn't move in time with the words spoken in the scene,” Theobalt explains. To animate a face in complete detail, you need an exact three-dimensional model of it, which the industry calls a face rig. The model also has to take the lighting of the scene and the way the face reflects that light into account.

The face model can be given different expressions in a mathematical process.

“We can generate this face rig entirely from recordings made with a single standard video camera. We use mathematical methods to estimate the parameters needed to capture all the details of the face rig. These include not only the facial geometry, meaning the shape of the surfaces, but also the reflectance properties of the face and the lighting of the scene,” the computer scientist elaborates. This information is enough for the method to reconstruct an individual face faithfully on the computer and, for example, to animate it naturally with laugh lines. “As a model of the face, it works like a complete face rig, which we can give various expressions by modifying its parameters,” says Theobalt. The algorithm developed by his team already extracts information on numerous expressions that show different emotions. “This means we can decide at the computer whether the actor or avatar should look happy or contemplative, and we can give their facial expression a level of detail that wasn't there when the scene was shot,” says the researcher from Saarbruecken.
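To give a rough idea of what “giving a face rig various expressions by modifying its parameters” can mean, here is a minimal sketch of a generic linear blendshape rig, one common way of parameterizing facial expressions. It is an illustrative assumption, not the Saarbruecken team's method: it ignores reflectance and lighting entirely, and the function names `apply_rig` and `fit_weights` as well as the toy three-vertex “face” are hypothetical.

```python
# Sketch of a generic linear blendshape face rig (an assumption for illustration,
# NOT the MPI/Saarland method). All names and data here are hypothetical.
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def apply_rig(neutral: Sequence[Vec3],
              deltas: Sequence[Sequence[Vec3]],
              weights: Sequence[float]) -> List[Vec3]:
    """Pose the face: each vertex = neutral vertex + sum(weight * expression offset)."""
    if len(deltas) != len(weights):
        raise ValueError("need exactly one weight per expression offset")
    posed = []
    for v, (x, y, z) in enumerate(neutral):
        for delta, w in zip(deltas, weights):
            dx, dy, dz = delta[v]
            x, y, z = x + w * dx, y + w * dy, z + w * dz
        posed.append((x, y, z))
    return posed


def fit_weights(neutral: Sequence[Vec3],
                deltas: Sequence[Sequence[Vec3]],
                observed: Sequence[Vec3]) -> List[float]:
    """Least-squares estimate of the weights so that apply_rig(...) matches observed.

    Solves the normal equations (A^T A) w = A^T b, where column j of A is the
    flattened offset j and b is the flattened (observed - neutral).
    """
    k = len(deltas)
    cols = [[c for vert in d for c in vert] for d in deltas]
    b = [o - n for ov, nv in zip(observed, neutral) for o, n in zip(ov, nv)]
    ata = [[sum(p * q for p, q in zip(cols[i], cols[j])) for j in range(k)]
           for i in range(k)]
    atb = [sum(p * q for p, q in zip(cols[i], b)) for i in range(k)]
    # Gaussian elimination with partial pivoting on the augmented matrix [A^T A | A^T b].
    m = [row[:] + [rhs] for row, rhs in zip(ata, atb)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("expression offsets are linearly dependent")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, k):
            f = m[r][col] / m[col][col]
            for c in range(col, k + 1):
                m[r][c] -= f * m[col][c]
    # Back substitution.
    w = [0.0] * k
    for r in range(k - 1, -1, -1):
        w[r] = (m[r][k] - sum(m[r][c] * w[c] for c in range(r + 1, k))) / m[r][r]
    return w


# Toy example: a hypothetical three-vertex "face" with two expression offsets.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, 1.0, 0.0)]
smile = [(0.0, 0.1, 0.0), (0.0, 0.1, 0.0), (0.0, 0.0, 0.0)]   # mouth corners move up
brow = [(0.0, -0.1, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.05)]  # hypothetical second expression
posed = apply_rig(neutral, [smile, brow], [0.8, 0.25])
estimated = fit_weights(neutral, [smile, brow], posed)
print(estimated)  # close to [0.8, 0.25]
```

Changing the weights passed to `apply_rig` changes the expression; `fit_weights` recovers them from an observed pose. This is only a much simplified analogue of estimating rig parameters: the system described here works from video pixels and also estimates reflectance and lighting, whereas this sketch assumes known 3D vertex positions.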

Until now, special effects companies in the film industry have had to invest a great deal of effort to achieve the same result. “Today the proportions of a face are reconstructed with the aid of scanners and multi-camera systems, which often require complicated, specially controlled lighting setups,” explains Pablo Garrido, one of Christian Theobalt's PhD students at Saarland University. Precisely such a system was recently set up in the White House to produce a 3D model for a bust of Barack Obama. This could have been accomplished far more easily with the Saarbruecken technology.

“With previous methods, you also needed precisely choreographed facial movements, in other words shots of the particular actor showing pleasure, anger or annoyance, for example,” Garrido explains. The Saarbruecken researchers themselves recently demonstrated how 3D face models can be generated in real time with a video or depth camera. However, those models are far less detailed than the ones produced by the new method. “We can work with any footage from a normal video camera. Even an old recording of a conversation, for example, is enough for us to model the face precisely and animate it,” the computer scientist states. The reconstructed model can even be used to match the mouth movements of an actor in a dubbed film to the newly spoken words.

Technology is improving communication with and through avatars

The technique is of interest not only to the film industry: it can also give a realistic, personal face to avatars in virtual worlds, to personal assistants on the internet and to virtual conversation partners in future telepresence applications. “Our technology can ensure that people feel more at ease when communicating with and through avatars,” says Theobalt. To achieve this photo-realistic facial reconstruction, the researcher and his team had to solve challenging scientific problems at the intersection of computer graphics and computer vision. The underlying methods for measuring deformable surfaces from monocular video can also be used in other areas, for example in robotics, autonomous systems or measurement in mechanical engineering.

Pablo Garrido and Christian Theobalt, together with their co-authors Michael Zollhoefer, Dan Casas, Levi Valgaerts, Kiran Varanasi and Patrick Perez, will publish their results in ACM Transactions on Graphics, the most important specialist journal for computer graphics, and present them at SIGGRAPH 2016. From 14 to 18 March, the scientists will showcase the technology at CeBIT in Hannover on the Saarland stand (Hall 6, Stand D 28).

Theobalt's research group has also spawned the start-up company The Captury, which has developed a technique for real-time, marker-less full-body motion capture from multi-view video of general scenes. This eliminates the special marker suits required by previous motion capture systems. The technology is used in computer animation, but also in medicine, ergonomics research, sports science and the factory of the future, where the interacting movements of industrial workers and robots have to be recorded. For this technology, The Captury won one of the main prizes in the IKT Innovativ start-up competition at CeBIT 2013.

Further information

Website of the working group: http://gvv.mpi-inf.mpg.de/projects/PersonalizedFaceRig/

Press contact:

Bertram Somieski

Max Planck Institute for Informatics

Max Planck Institute for Software Systems

Public Relations

Tel +49/681/9325-5710 – somieski@mpi-inf.mpg.de

More Information:

http://www.uni-saarland.de