Holographic displays

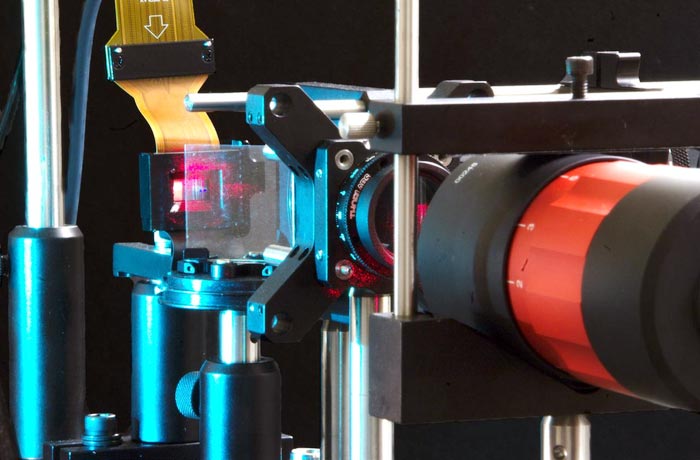

Photograph of a holographic display prototype.

Credit: Stanford Computational Imaging Lab

Virtual and augmented reality headsets are designed to place wearers directly into other environments, worlds and experiences. While the technology is already popular among consumers for its immersive quality, there could be a future in which holographic displays make these experiences look even more like real life. In pursuit of these better displays, the Stanford Computational Imaging Lab has combined its expertise in optics and artificial intelligence. The lab’s most recent advances in this area are detailed in a paper published Nov. 12 in Science Advances and in work that will be presented at SIGGRAPH Asia 2021 in December.

At its core, this research confronts the fact that current augmented and virtual reality displays only show 2D images to each of the viewer’s eyes, instead of 3D – or holographic – images like we see in the real world.

“They are not perceptually realistic,” explained Gordon Wetzstein, associate professor of electrical engineering and leader of the Stanford Computational Imaging Lab. Wetzstein and his colleagues are working to come up with solutions to bridge this gap between simulation and reality while creating displays that are more visually appealing and easier on the eyes.

The research published in Science Advances details a technique for reducing a speckling distortion often seen in regular laser-based holographic displays, while the SIGGRAPH Asia paper proposes a technique to more realistically represent the physics that would apply to the 3D scene if it existed in the real world.

Bridging simulation and reality

For decades, the image quality of holographic displays has been limited. As Wetzstein explains it, researchers have faced the challenge of getting a holographic display to look as good as an LCD.

One problem is that it is difficult to control the shape of light waves at the resolution of a hologram. The other major challenge hindering the creation of high-quality holographic displays is closing the gap between what happens in the simulation and what the same scene looks like in a real environment.
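To make the “simulation” side of this gap concrete, here is a minimal sketch of one idealized model commonly used to describe how light travels away from a hologram: free-space propagation by the angular spectrum method. It is a textbook model, not the lab’s own; the function name and the wavelength, pixel pitch and distance in the usage lines are hypothetical values. Real optics depart from a model like this in ways it does not capture, which is exactly the gap described above.

```python
import numpy as np


def angular_spectrum_propagate(field, wavelength, pitch, distance):
    """Propagate a sampled complex field by `distance` (meters) in free space.

    Textbook angular spectrum method: decompose the field into plane waves with
    an FFT, advance each plane wave's phase, and transform back. Spatial
    frequencies beyond 1/wavelength are evanescent and are dropped.
    """
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pitch)  # spatial frequencies in cycles per meter
    fy = np.fft.fftfreq(ny, d=pitch)
    FX, FY = np.meshgrid(fx, fy)      # both arrays have shape (ny, nx)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    propagating = arg > 0
    kz = 2 * np.pi * np.sqrt(np.where(propagating, arg, 0.0))
    transfer = np.where(propagating, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


# Hypothetical parameters: green light, 6.4 um pixel pitch, 5 cm propagation.
rng = np.random.default_rng(0)
slm_phase = rng.uniform(0, 2 * np.pi, size=(256, 256))
slm_field = np.exp(1j * slm_phase)  # phase-only modulation, unit amplitude
image = np.abs(angular_spectrum_propagate(slm_field, 520e-9, 6.4e-6, 0.05)) ** 2
```

Because the transfer function has unit magnitude for propagating waves, the model conserves total energy and can be run backward with a negative distance; a physical display offers neither guarantee exactly.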

Scientists have previously attempted to create algorithms to address both of these problems. Wetzstein and his colleagues also developed algorithms, but did so using neural networks, a form of artificial intelligence that attempts to mimic the way the human brain learns. They call this approach “neural holography.”

“Artificial intelligence has revolutionized pretty much all aspects of engineering and beyond,” said Wetzstein. “But in this specific area of holographic displays or computer-generated holography, people have only just started to explore AI techniques.”

Yifan Peng, a postdoctoral research fellow in the Stanford Computational Imaging Lab, is using his interdisciplinary background in both optics and computer science to help design the optical engine that goes into the holographic displays.

“Only recently, with the emerging machine intelligence innovations, have we had access to the powerful tools and capabilities to make use of the advances in computer technology,” said Peng, who is co-lead author of the Science Advances paper and a co-author of the SIGGRAPH paper.

To build their neural holographic display, the researchers trained a neural network to mimic the real-world physics of what was happening in the display, which allowed them to generate images in real time. They then paired this with a “camera-in-the-loop” calibration strategy that provides near-instantaneous feedback to inform adjustments and improvements. With an algorithm and a calibration technique that both run in real time with the image being viewed, the researchers were able to create more realistic-looking visuals with better color, contrast and clarity.
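The camera-in-the-loop idea can be illustrated with a toy phase-only hologram. In this minimal sketch (a simplification of ours, not the lab’s code), the “simulation” is an idealized Fourier-holography model, the “real display” is a mock function that adds an optical aberration the model does not know about, and each optimization step computes the loss from the mock camera’s image while back-propagating through the simulated model. All function names, the aberration, the hand-written Adam optimizer settings and the array sizes are hypothetical, and the sketch omits the neural network the researchers trained to model their display.

```python
import numpy as np


def model_intensity(phase):
    """Idealized forward model: far-field intensity of a phase-only SLM (unitary FFT)."""
    return np.abs(np.fft.fft2(np.exp(1j * phase), norm="ortho")) ** 2


def surrogate_gradient(phase, intensity, target):
    """Gradient of sum((intensity - target)**2) w.r.t. phase, back-propagated
    through the idealized model. Passing a *captured* intensity instead of the
    model's own output is the camera-in-the-loop substitution."""
    u = np.exp(1j * phase)
    U = np.fft.fft2(u, norm="ortho")
    g_U = 2.0 * (intensity - target) * U    # dL/dU* because intensity = |U|^2
    g_u = np.fft.ifft2(g_U, norm="ortho")   # adjoint of the unitary FFT
    return 2.0 * np.imag(np.conj(u) * g_u)  # chain rule through u = exp(i*phase)


def optimize_phase(target, capture, steps=200, lr=0.05, seed=0):
    """Adam on the SLM phase; `capture(phase)` returns the intensity used in the loss."""
    rng = np.random.default_rng(seed)
    phase = rng.uniform(-np.pi, np.pi, target.shape)
    m = np.zeros_like(phase)
    v = np.zeros_like(phase)
    b1, b2, eps = 0.9, 0.999, 1e-8
    for t in range(1, steps + 1):
        g = surrogate_gradient(phase, capture(phase), target)
        m = b1 * m + (1 - b1) * g
        v = b2 * v + (1 - b2) * g * g
        phase -= lr * (m / (1 - b1**t)) / (np.sqrt(v / (1 - b2**t)) + eps)
    return phase


n = 64
target = np.zeros((n, n))
target[24:40, 24:40] = 1.0
target *= n * n / target.sum()  # phase-only SLM + unitary FFT conserve total energy
yy, xx = np.mgrid[0:n, 0:n] / n
aberration = 3.0 * ((xx - 0.5) ** 2 + (yy - 0.5) ** 2)  # hypothetical, unknown to the model


def mock_camera(phase):
    """Stand-in for display + camera: the same optics plus an aberration the model lacks."""
    return model_intensity(phase + aberration)


phase_model_only = optimize_phase(target, model_intensity)  # trusts the simulation
phase_citl = optimize_phase(target, mock_camera)           # loss uses the "camera" image
```

The design choice worth noting is that the camera image only replaces the simulated intensity inside the loss; the gradient still flows through the model, so this surrogate works best when the model is already roughly right. Improving that model is where a learned, neural network–based model of the display comes in.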

The new SIGGRAPH Asia paper highlights the lab’s first application of their neural holography system to 3D scenes. This system produces high-quality, realistic representations of scenes that contain visual depth, even when parts of the scenes are intentionally depicted as far away or out of focus.

The Science Advances work uses the same camera-in-the-loop optimization strategy, paired with an artificial intelligence-inspired algorithm, to provide an improved system for holographic displays that use partially coherent light sources: LEDs and superluminescent LEDs (SLEDs). These light sources are attractive for their cost, size and energy requirements, and they also have the potential to avoid the speckled appearance of images produced by systems that rely on coherent light sources, such as lasers. But the same characteristics that help partially coherent source systems avoid speckling tend to result in blurred images that lack contrast. By building an algorithm specific to the physics of partially coherent light sources, the researchers have produced the first high-quality, speckle-free holographic 2D and 3D images using LEDs and SLEDs.
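Why partially coherent sources suppress speckle can be seen in a small statistical sketch. It assumes, as an idealization, that a partially coherent source behaves like K mutually incoherent components, each producing an independent, fully developed speckle pattern. Their intensities add rather than their fields, so speckle contrast (standard deviation divided by mean) falls roughly as 1/sqrt(K). The function names and sizes are hypothetical, and the sketch models only the speckle side of the trade-off, not the blur and contrast loss that the researchers’ algorithm addresses.

```python
import numpy as np


def speckle_pattern(n, rng):
    """Fully developed speckle: far-field intensity of a unit-amplitude field with random phase."""
    field = np.exp(1j * rng.uniform(0, 2 * np.pi, (n, n)))
    return np.abs(np.fft.fft2(field, norm="ortho")) ** 2


def speckle_contrast(intensity):
    """Speckle contrast C = std / mean; C is close to 1 for fully developed speckle."""
    return intensity.std() / intensity.mean()


def partially_coherent_intensity(n, num_modes, seed=0):
    """Incoherent sum (here, the average) of `num_modes` independent speckle patterns."""
    rng = np.random.default_rng(seed)
    return np.mean([speckle_pattern(n, rng) for _ in range(num_modes)], axis=0)


for k in (1, 4, 16):
    c = speckle_contrast(partially_coherent_intensity(256, k))
    print(f"{k:2d} modes: contrast {c:.2f} (idealized theory: {1 / np.sqrt(k):.2f})")
```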

Transformative potential

Wetzstein and Peng believe this coupling of emerging artificial intelligence techniques with virtual and augmented reality will become increasingly common across a number of industries in the coming years.

“I’m a big believer in the future of wearable computing systems and AR and VR in general. I think they’re going to have a transformative impact on people’s lives,” said Wetzstein. It may not happen in the next few years, he said, but Wetzstein believes that augmented reality is the “big future.”

Though virtual reality is primarily associated with gaming right now, it and augmented reality have potential uses in a variety of fields, including medicine. Medical students can use augmented reality for training, and it can also be used to overlay medical data from CT scans and MRIs directly onto patients.

“These types of technologies are already in use for thousands of surgeries per year,” said Wetzstein. “We envision that head-worn displays that are smaller, lighter weight and just more visually comfortable are a big part of the future of surgery planning.”

“It is very exciting to see how the computation can improve the display quality with the same hardware setup,” said Jonghyun Kim, a visiting scholar from Nvidia and co-author of both papers. “Better computation can make a better display, which can be a game changer for the display industry.”

Stanford graduate student Suyeon Choi is co-lead author of both papers, and Stanford graduate student Manu Gopakumar is co-lead author of the SIGGRAPH paper. This work was funded by Ford, Sony, Intel, the National Science Foundation, the Army Research Office, a Kwanjeong Scholarship, a Korea Government Scholarship and a Stanford Graduate Fellowship.

Journal: Science Advances

DOI: 10.1126/sciadv.abg5040

Article Title: Speckle-free holography with partially coherent light sources and camera-in-the-loop calibration

Media Contact

Taylor Kubota

Stanford University

tkubota@stanford.edu

Office: 650-724-7707

