Making AI smarter with an artificial, multisensory integrated neuron

A Penn State research team developed a bio-inspired artificial neuron that can process visual and tactile sensory inputs together.

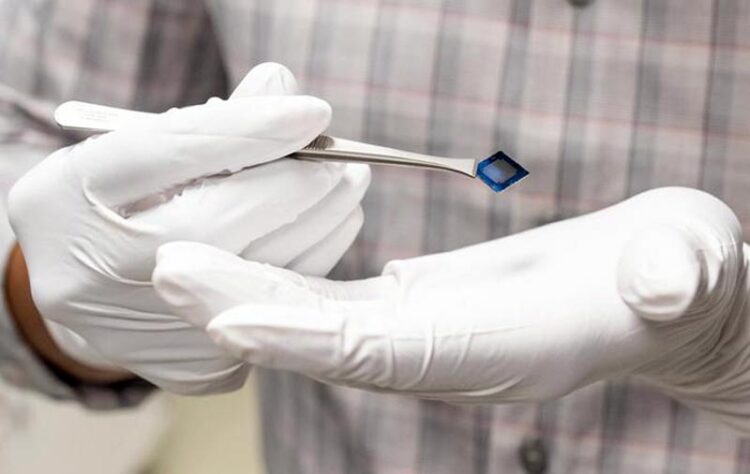

Credit: Tyler Henderson/Penn State

The neuron, developed by Penn State researchers, processes visual and tactile input together.

The feel of a cat’s fur can reveal some information, but seeing the feline provides critical details: is it a housecat or a lion? While the sound of fire crackling may be ambiguous, its scent confirms the burning wood. Our senses synergize to give a comprehensive understanding, particularly when individual signals are subtle. The collective sum of biological inputs can be greater than their individual contributions. Robots tend to follow more straightforward addition, but Penn State researchers have now harnessed the biological concept for application in artificial intelligence (AI) to develop the first artificial, multisensory integrated neuron.

Led by Saptarshi Das, associate professor of engineering science and mechanics at Penn State, the team published their work on September 15 in Nature Communications.

“Robots make decisions based on the environment they are in, but their sensors do not generally talk to each other,” said Das, who also has joint appointments in electrical engineering and in materials science and engineering. “A collective decision can be made through a sensor processing unit, but is that the most efficient or effective method? In the human brain, one sense can influence another and allow the person to better judge a situation.”

For instance, a car might have one sensor scanning for obstacles, while another senses darkness to modulate the intensity of the headlights. Individually, these sensors relay information to a central unit which then instructs the car to brake or adjust the headlights. According to Das, this process consumes more energy. Allowing sensors to communicate directly with each other can be more efficient in terms of energy and speed — particularly when the inputs from both are faint.

“Biology enables small organisms to thrive in environments with limited resources, minimizing energy consumption in the process,” said Das, who is also affiliated with the Materials Research Institute. “The requirements for different sensors are based on the context — in a dark forest, you’d rely more on listening than seeing, but we don’t make decisions based on just one sense. We have a complete sense of our surroundings, and our decision making is based on the integration of what we’re seeing, hearing, touching, smelling, etcetera. The senses evolved together in biology, but separately in AI. In this work, we’re looking to combine sensors and mimic how our brains actually work.”

The team focused on integrating a tactile sensor and a visual sensor so that the output of one sensor modifies the other, with the help of visual memory. According to Muhtasim Ul Karim Sadaf, a third-year doctoral student in engineering science and mechanics, even a short-lived flash of light can significantly enhance the chance of successful movement through a dark room.

“This is because visual memory can subsequently influence and aid the tactile responses for navigation,” Sadaf said. “This would not be possible if our visual and tactile cortex were to respond to their respective unimodal cues alone. We have a photo memory effect, where light shines and we can remember. We incorporated that ability into a device through a transistor that provides the same response.”

The researchers fabricated the multisensory neuron by connecting a tactile sensor to a phototransistor based on a monolayer of molybdenum disulfide, a compound that exhibits unique electrical and optical characteristics useful for detecting light and supporting transistors. The sensor generates electrical spikes in a manner reminiscent of neurons processing information, allowing it to integrate both visual and tactile cues.

It’s the equivalent of seeing an “on” light on the stove and feeling heat coming off of a burner. Seeing the light on doesn’t necessarily mean the burner is hot yet, but a hand only needs to feel a nanosecond of heat before the body reacts and pulls the hand away from the potential danger. The input of light and heat triggered signals that induced the hand’s response. In this case, the researchers measured the artificial neuron’s version of this by observing the signaling outputs that resulted from visual and tactile input cues.

To simulate touch input, the tactile sensor used the triboelectric effect, in which two layers slide against one another to produce electricity, meaning the touch stimuli were encoded into electrical impulses. To simulate visual input, the researchers shone a light onto the monolayer molybdenum disulfide photo memtransistor, a transistor that can remember visual input, much as a person can hold onto the general layout of a room after a quick flash illuminates it.

They found that the neuron’s sensory response, simulated as electrical output, was boosted when both visual and tactile signals were weak, exceeding the sum of the responses to each cue alone.
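To make the idea of a response that exceeds the sum of its parts concrete, here is a minimal toy sketch in Python. It is not the researchers' device model: the logistic response curve, the `threshold` and `steepness` values, and the function names are all assumptions chosen only for illustration. It shows how a single nonlinear unit driven by two summed inputs can respond more strongly to two weak cues together than the two cues would produce separately, while strong cues saturate and lose that advantage.

```python
import math


def response(visual: float, tactile: float,
             threshold: float = 1.0, steepness: float = 6.0) -> float:
    """Toy neuron output: logistic function of the summed input drive.

    The baseline (output with no input at all) is subtracted so that
    zero input gives zero response. All parameters are illustrative
    assumptions, not values from the published work.
    """
    def logistic(drive: float) -> float:
        return 1.0 / (1.0 + math.exp(-steepness * (drive - threshold)))

    return logistic(visual + tactile) - logistic(0.0)


def superadditive_gain(visual: float, tactile: float) -> float:
    """Combined response minus the sum of the two single-cue responses.

    A positive value means the combined response is super-additive.
    """
    combined = response(visual, tactile)
    unimodal_sum = response(visual, 0.0) + response(0.0, tactile)
    return combined - unimodal_sum


if __name__ == "__main__":
    # Hypothetical cue strengths in arbitrary units.
    for label, strength in [("weak", 0.4), ("strong", 1.5)]:
        gain = superadditive_gain(strength, strength)
        print(f"{label} cues: combined minus unimodal sum = {gain:+.3f}")
```

In this toy model the gain is positive for the weak cues and negative for the strong ones, because each weak cue alone stays below the unit's threshold while the two together cross it. That is only one simple way such an effect could arise; the article does not describe the mechanism in these terms.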

“Interestingly, this effect resonates remarkably well with its biological counterpart — a visual memory naturally enhances the sensitivity to tactile stimulus,” said co-first author Najam U Sakib, a third-year doctoral student in engineering science and mechanics. “When cues are weak, you need to combine them to better understand the information, and that’s what we saw in the results.”

Das explained that an artificial multisensory neuron system could enhance sensor technology’s efficiency, paving the way for more eco-friendly AI uses. As a result, robots, drones and self-driving vehicles could navigate their environment more effectively while using less energy.

“The super additive summation of weak visual and tactile cues is the key accomplishment of our research,” said co-author Andrew Pannone, a fourth-year doctoral student in engineering science and mechanics. “For this work, we only looked into two senses. We’re working to identify the proper scenario to incorporate more senses and see what benefits they may offer.”

Harikrishnan Ravichandran, a fourth-year doctoral student in engineering science and mechanics at Penn State, also co-authored this paper.

The Army Research Office and the National Science Foundation supported this work.

Journal: Nature Communications

DOI: 10.1038/s41467-023-40686-z

Article Title: A bio-inspired visuotactile neuron for multisensory integration

Article Publication Date: 15-Sep-2023

Media Contact

Adrienne Berard

Penn State

akb6884@psu.edu
