Learning more about particle collisions with machine learning

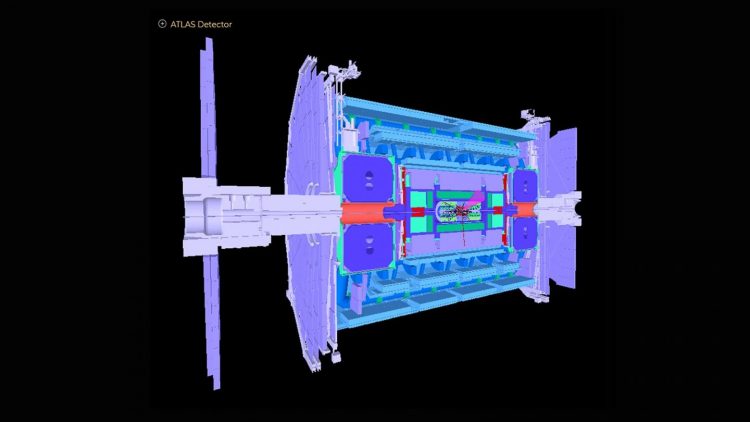

Schematic of ATLAS detector in the Large Hadron Collider. Credit: ATLAS Collaboration

The U.S. Department of Energy's (DOE) Argonne National Laboratory has made many pivotal contributions to the construction and operation of the ATLAS experimental detector at the Large Hadron Collider (LHC), and to the analysis of the signals the detector records, which uncover the underlying physics of particle collisions.

Argonne is now playing a lead role in the high-luminosity upgrade of the ATLAS detector for operations that are planned to begin in 2027. To that end, a team of Argonne physicists and computational scientists has devised a machine learning-based algorithm that approximates how the present detector would respond to the greatly increased data expected with the upgrade.

The largest physics machine ever built, the LHC accelerates two beams of protons in opposite directions around a 17-mile ring until they approach the speed of light, smashes them together and analyzes the collision products with gigantic detectors such as ATLAS.

The ATLAS instrument is about the height of a six-story building and weighs approximately 7,000 tons. Today, the LHC continues to study the Higgs boson, as well as address fundamental questions on how and why matter in the universe is the way it is.

“Most of the research questions at ATLAS involve finding a needle in a giant haystack, where scientists are only interested in finding one event occurring among a billion others,” said Walter Hopkins, assistant physicist in Argonne's High Energy Physics (HEP) division.

As part of the LHC upgrade, efforts are now progressing to boost the LHC's luminosity (the number of proton-to-proton interactions per collision of the two proton beams) by a factor of five. This will produce about 10 times more data per year than the LHC experiments presently acquire. How well the detectors respond to this increased event rate still needs to be understood.

This requires running high-performance computer simulations of the detectors to accurately assess known processes resulting from LHC collisions. These large-scale simulations are costly and demand large chunks of computing time on the world's most powerful supercomputers.

The Argonne team has created a machine learning algorithm that will be run as a preliminary simulation before any full-scale simulations. This algorithm approximates, quickly and at much lower cost, how the present detector would respond to the greatly increased data expected with the upgrade.

It involves simulation of detector responses to a particle-collision experiment and the reconstruction of objects from the physical processes. These reconstructed objects include jets or sprays of particles, as well as individual particles like electrons and muons.

“The discovery of new physics at the LHC and elsewhere demands ever more complex methods for big data analyses,” said Doug Benjamin, a computational scientist in HEP. “These days that usually means use of machine learning and other artificial intelligence techniques.”

The analysis methods previously used for initial simulations did not employ machine learning algorithms and are time-consuming because they involve manually updating experimental parameters when conditions at the LHC change. Some may also miss important data correlations for a given set of input variables to an experiment.

The Argonne-developed algorithm learns, in real time while a training procedure is applied, the various features that need to be introduced through detailed full simulations, thereby avoiding the need to handcraft experimental parameters. The method can also capture complex interdependencies among variables that earlier methods could not.
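To make the idea of learning a detector response from full simulations concrete, here is a deliberately simplified Python sketch. It is not the team's algorithm: a per-bin mean and spread stands in for the machine learning model, and the function names, bin edges and resolution formula (`toy_full_simulation`, `BIN_EDGES`, the 3% constant term) are all assumptions made up for illustration. A toy "full simulation" smears a jet's true transverse momentum, the response is learned from those samples, and a fast simulation then reuses the learned parameters instead of rerunning the slow step.

```python
import bisect
import math
import random
import statistics

# Hypothetical truth-pT bin edges in GeV (an assumption for this toy).
BIN_EDGES = [20.0, 50.0, 100.0, 200.0, 400.0]


def bin_index(true_pt):
    """Return the index of the truth-pT bin, clamped to the valid range."""
    i = bisect.bisect_right(BIN_EDGES, true_pt) - 1
    return max(0, min(i, len(BIN_EDGES) - 2))


def toy_full_simulation(true_pt, rng):
    """Stand-in for a slow, detailed simulation (assumed resolution model)."""
    # Relative resolution: a 1/sqrt(pT) term and a 3% constant term,
    # added in quadrature. Both terms are illustrative assumptions.
    rel_sigma = math.hypot(1.0 / math.sqrt(true_pt), 0.03)
    return true_pt * rng.gauss(1.0, rel_sigma)


def learn_response(true_pts, reco_pts):
    """Learn the mean and spread of reco/true in each truth-pT bin."""
    ratios = [[] for _ in range(len(BIN_EDGES) - 1)]
    for t, r in zip(true_pts, reco_pts):
        ratios[bin_index(t)].append(r / t)
    return [(statistics.mean(b), statistics.stdev(b)) for b in ratios]


def fast_simulation(true_pt, params, rng):
    """Cheap approximation: sample the response from the learned parameters."""
    mean, sigma = params[bin_index(true_pt)]
    return true_pt * rng.gauss(mean, sigma)


rng = random.Random(42)
true_pts = [rng.uniform(20.0, 400.0) for _ in range(20000)]
reco_pts = [toy_full_simulation(t, rng) for t in true_pts]

# No resolution constants are typed in by hand at this step:
# the parameters come entirely from the simulated samples.
params = learn_response(true_pts, reco_pts)

for (low, high), (mean, sigma) in zip(zip(BIN_EDGES, BIN_EDGES[1:]), params):
    print(f"{low:5.0f}-{high:5.0f} GeV: mean={mean:.3f}, sigma={sigma:.3f}")
```

If the toy full simulation changes, rerunning `learn_response` updates the fast simulation without any manual retuning, which is the property the paragraph above describes. A real parametrization would condition on many correlated variables at once, which is where a machine learning model becomes useful.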

“With our stripped-down simulation, you can learn the basics at comparatively little computational cost and time, then you can much more efficiently proceed with full simulations at a later date,” said Hopkins. “Our machine learning algorithm also provides users with better discriminating power on where to look for new or rare events in an experiment,” he added.

The team's algorithm could prove invaluable not only for ATLAS, but for the multiple experimental detectors at the LHC, as well as other particle physics experiments now being conducted around the world.

This study, titled “Automated detector simulation and reconstruction parametrization using machine learning,” appeared in the Journal of Instrumentation. In addition to Hopkins and Benjamin, Argonne authors include Serge Chekanov, Ying Li and Jeremy Love. This study received funding from the U.S. Department of Energy's Office of High Energy Physics and used the high-performance computing cluster operated by the Laboratory Computing Resource Center at Argonne.
