A robot learns to imagine itself

A robot can learn full-body morphology via visual self-modeling to adapt to multiple motion planning and control tasks.

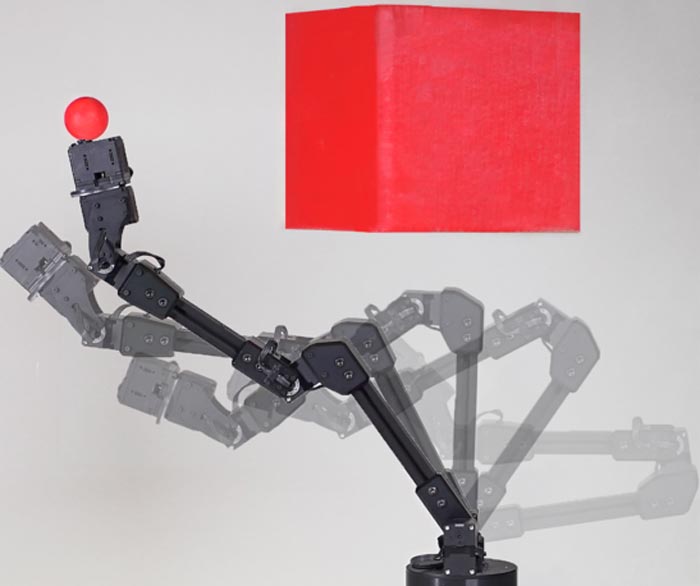

Credit: Jane Nisselson and Yinuo Qin/Columbia Engineering

Columbia Engineers build a robot that learns to understand itself, rather than the world around it.

As every athletic or fashion-conscious person knows, our body image is not always accurate or realistic, but it’s an important piece of information that determines how we function in the world. When you get dressed or play ball, your brain is constantly planning ahead so that you can move your body without bumping, tripping, or falling over.

We humans acquire our body-model as infants, and robots are following suit. A Columbia Engineering team announced today they have created a robot that, for the first time, is able to learn a model of its entire body from scratch, without any human assistance. In a new study published in Science Robotics, the researchers demonstrate how their robot created a kinematic model of itself, and then used its self-model to plan motion, reach goals, and avoid obstacles in a variety of situations. It even automatically recognized and then compensated for damage to its body.

Robot watches itself like an infant exploring itself in a hall of mirrors

The researchers placed a robotic arm inside a circle of five streaming video cameras. The robot watched itself through the cameras as it undulated freely. Like an infant exploring itself for the first time in a hall of mirrors, the robot wiggled and contorted to learn how exactly its body moved in response to various motor commands. After about three hours, the robot stopped. Its internal deep neural network had finished learning the relationship between the robot’s motor actions and the volume it occupied in its environment.
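To make the idea of a self-model concrete, here is a minimal sketch of the kind of question such a model answers: given a set of motor positions, is a particular point in space occupied by the robot's body? This is not the researchers' code. The learned neural network is replaced by a hand-written stand-in for a hypothetical flat two-link arm, and the link lengths, link thickness, and all function names are assumptions made purely for illustration.

```python
import math

# Hypothetical arm geometry (illustrative values, not from the study).
LINK_LENGTHS = (1.0, 0.8)  # length of each link
LINK_RADIUS = 0.1          # half-thickness of each link


def joint_positions(angles):
    """Return the base, elbow, and tip positions for the given joint angles (radians)."""
    x = y = 0.0
    heading = 0.0
    points = [(x, y)]
    for length, angle in zip(LINK_LENGTHS, angles):
        heading += angle  # each joint angle is relative to the previous link
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points


def point_segment_distance(point, start, end):
    """Shortest distance from a point to the line segment start-end."""
    px, py = point
    ax, ay = start
    bx, by = end
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project the point onto the segment and clamp to its endpoints.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def occupied(angles, point):
    """Stand-in self-model query: does the body occupy `point` at these joint angles?"""
    points = joint_positions(angles)
    return any(
        point_segment_distance(point, start, end) <= LINK_RADIUS
        for start, end in zip(points, points[1:])
    )


def is_collision_free(angles, obstacle_points):
    """A pose is acceptable if the body occupies none of the obstacle points."""
    return not any(occupied(angles, p) for p in obstacle_points)


if __name__ == "__main__":
    obstacle = [(1.5, 0.0)]
    print(is_collision_free((0.0, 0.0), obstacle))          # arm stretched along x
    print(is_collision_free((math.pi / 2, 0.0), obstacle))  # arm pointing up
```

A motion planner could call a query like `is_collision_free` on candidate poses and discard those that overlap an obstacle. In the study, the answer to the occupancy question comes from a network trained on camera footage rather than from hand-coded geometry as in this sketch.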

“We were really curious to see how the robot imagined itself,” said Hod Lipson, professor of mechanical engineering and director of Columbia’s Creative Machines Lab, where the work was done. “But you can’t just peek into a neural network, it’s a black box.” After the researchers struggled with various visualization techniques, the self-image gradually emerged. “It was a sort of gently flickering cloud that appeared to engulf the robot’s three-dimensional body,” said Lipson. “As the robot moved, the flickering cloud gently followed it.” The robot’s self-model was accurate to about 1% of its workspace.

Self-modeling robots will lead to more self-reliant autonomous systems

The ability of robots to model themselves without being assisted by engineers is important for many reasons: Not only does it save labor, but it also allows the robot to keep up with its own wear-and-tear, and even detect and compensate for damage. The authors argue that this ability is important as we need autonomous systems to be more self-reliant. A factory robot, for instance, could detect that something isn’t moving right, and compensate or call for assistance.

“We humans clearly have a notion of self,” explained the study’s first author Boyuan Chen, who led the work and is now an assistant professor at Duke University. “Close your eyes and try to imagine how your own body would move if you were to take some action, such as stretch your arms forward or take a step backward. Somewhere inside our brain we have a notion of self, a self-model that informs us what volume of our immediate surroundings we occupy, and how that volume changes as we move.”

Self-awareness in robots

The work is part of Lipson’s decades-long quest to find ways to grant robots some form of self-awareness. “Self-modeling is a primitive form of self-awareness,” he explained. “If a robot, animal, or human, has an accurate self-model, it can function better in the world, it can make better decisions, and it has an evolutionary advantage.”

The researchers are aware of the limits, risks, and controversies surrounding granting machines greater autonomy through self-awareness. Lipson is quick to admit that the kind of self-awareness demonstrated in this study is “trivial compared to that of humans, but you have to start somewhere. We have to go slowly and carefully, so we can reap the benefits while minimizing the risks.”

About the Study

The paper is titled “Full-Body Visual Self-Modeling of Robot Morphologies.”

The authors of the paper are Boyuan Chen, Robert Kwiatkowski, Carl Vondrick, and Hod Lipson.

The study was supported by DARPA MTO Lifelong Learning Machines (L2M) Program W911NF-21-2-0071, NSF NRI Award 1925157, NSF AI Institute for Dynamical Systems 2112085, NSF CAREER Award 2046910, and gifts from Facebook Research and Northrop Grumman.

The authors declare no financial or other conflicts of interest.

LINKS:

VIDEO1: https://youtu.be/iSYn9ienWF0 (Technical Summary of this work)

VIDEO2: https://youtu.be/3jbBEMfZTSg (Overview Video on Lab’s work on Robot Sentience)

PROJECT WEBSITE: https://www.creativemachineslab.com/visual-self-modeling.html

Journal: Science Robotics

DOI: 10.1126/scirobotics.abn1944

Article Title: Full-Body Visual Self-Modeling of Robot Morphologies

Article Publication Date: 13-Jul-2022

Media Contact

Holly Evarts

Columbia University School of Engineering and Applied Science

he2181@columbia.edu

Office: 212-854-3206

Cell: 347-453-7408
