Efficient Processing of Big Data on a Daily Routine Basis

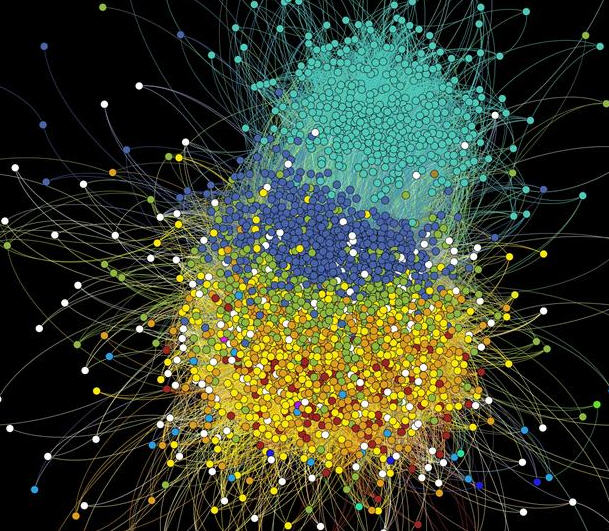

Using an algorithm, the increasing networking of students on Facebook can be displayed according to their age. (Graphics: Michael Hamann, KIT)

Computer systems supply rapidly growing data volumes. Computer science now faces the challenge of processing these huge amounts of data (big data) in a reasonable and secure manner. The new priority program “Algorithms for Big Data” (SPP 1736), funded by the German Research Foundation (DFG), aims to develop more efficient computing methods. The Institute of Theoretical Informatics of the Karlsruhe Institute of Technology (KIT) is involved in four of the 15 subprojects of this SPP.

As a result of new mobile technologies, such as smartphones and tablet PCs, the use of computer systems has increased rapidly in recent years. These systems produce growing amounts of data with varying structure. However, adequate programs for processing these data are lacking. “The algorithms known so far are not designed for processing the huge data volumes associated with many problems. The new priority program is aimed at developing theoretically sound methods that can be applied in practice,” explains Assistant Professor Henning Meyerhenke, KIT.

So far, big data research has focused on scientific applications, such as computer-based simulations for weather forecasts. KIT researchers are now working on solutions that make computing processes more efficient and can be applied in everyday use. Examples are internet search queries or the structural analysis of social networks.

Four KIT research groups participate in the priority program.

The priority program “Algorithms for Big Data”, which the DFG is funding for a period of six years, covers several projects all over Germany. Four of them involve the KIT Institute of Theoretical Informatics.

The project “Rapid Inexact Combinatorial and Algebraic Solvers for Large Networks” of Assistant Professor Henning Meyerhenke addresses complex problems encountered in large networks. The tasks to be solved are motivated by biological applications. For example, individuals of a species can be linked in a network according to the similarity of their genomes and then classified. The new methods help classify the resulting data with less computational effort. This makes it easier for biologists to derive new findings.
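The idea of linking individuals by genome similarity and then classifying them can be illustrated with a minimal sketch. It is not the project's method: the position-wise `similarity` measure, the `threshold` value, and the use of connected components as "classes" are all assumptions chosen for illustration.

```python
from itertools import combinations


def similarity(a: str, b: str) -> float:
    """Fraction of positions at which two equal-length sequences agree.

    Assumption: a deliberately simple stand-in for a real genome
    similarity measure.
    """
    if len(a) != len(b):
        raise ValueError("sequences must have equal length")
    if not a:
        return 1.0
    return sum(x == y for x, y in zip(a, b)) / len(a)


def classify(genomes: dict[str, str], threshold: float = 0.8) -> list[set[str]]:
    """Group individuals into connected components of the similarity network.

    Two individuals are linked when their similarity reaches `threshold`
    (a hypothetical cut-off). Components are tracked with union-find.
    """
    parent = {name: name for name in genomes}

    def find(x: str) -> str:
        # Follow parent links to the representative, halving the path.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    # Compare every pair of individuals and merge similar ones.
    for u, v in combinations(genomes, 2):
        if similarity(genomes[u], genomes[v]) >= threshold:
            parent[find(u)] = find(v)

    groups: dict[str, set[str]] = {}
    for name in genomes:
        groups.setdefault(find(name), set()).add(name)
    return list(groups.values())
```

Comparing all pairs takes time quadratic in the number of individuals, which quickly becomes impractical for large data sets. Faster, possibly inexact, solvers are meant to reduce costs of this kind.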

In the project “Scalable Cryptography” of Assistant Professor Dennis Hofheinz (KIT) and Professor Eike Kiltz (Ruhr-Universität Bochum), work focuses on the security of big data. Cryptographic methods, such as encryption or digital signatures, are intended to guarantee security for large data volumes as well. However, existing methods are difficult to adapt to the new tasks. The security provided by the RSA-OAEP encryption method used in conventional internet browsers, for instance, is insufficient in the case of big data. “We are looking for a solution that stably guarantees security even in case of an increasing number of accesses and users,” says Assistant Professor Dennis Hofheinz, who is a member of the Cryptography and Security Working Group at the KIT.

The steadily growing social networks, such as Facebook or Twitter, produce large accumulations of data. At the same time, these data are of high economic and political value. This is the starting point of the project “Clustering in Social Online Networks” of Professor Dorothea Wagner (KIT) and Professor Ulrik Brandes (Universität Konstanz). With the help of new algorithms, the development of online communities in social networks is to be reconstructed.

To search a large volume of data, e.g. on the internet, a functioning tool, such as a good search engine, is indispensable. “The search engines used today can be further improved by algorithms of increased efficiency,” says Professor Peter Sanders, who also conducts research at the Institute of Theoretical Informatics. Within the framework of his project “Text Indexing for Big Data”, Sanders, together with Professor Johannes Fischer of the Technical University of Dortmund, is looking for ways to optimize them. In particular, they plan to use many processors at the same time, while keeping it possible to search data in highly compressed form.
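What a text index does can be shown with a small sketch of a suffix array, one classic index structure. This is an illustration only and not the project's approach: the construction below is naive, runs on a single processor, and stores the text uncompressed, whereas the project aims at parallel and compressed indexes. The function names are hypothetical.

```python
def build_suffix_array(text: str) -> list[int]:
    """Return the start positions of all suffixes in lexicographic order.

    Naive construction by sorting the suffixes directly; fine for short
    texts, far too slow and memory-hungry for big data.
    """
    return sorted(range(len(text)), key=lambda i: text[i:])


def find_occurrences(text: str, suffix_array: list[int], pattern: str) -> list[int]:
    """Return all start positions of `pattern` in `text`, in ascending order.

    All suffixes that begin with `pattern` form one contiguous range of the
    suffix array, which two binary searches locate.
    """
    m = len(pattern)

    # First index whose suffix prefix is >= pattern.
    lo, hi = 0, len(suffix_array)
    while lo < hi:
        mid = (lo + hi) // 2
        if text[suffix_array[mid]:suffix_array[mid] + m] < pattern:
            lo = mid + 1
        else:
            hi = mid
    start = lo

    # First index whose suffix prefix is > pattern.
    hi = len(suffix_array)
    while lo < hi:
        mid = (lo + hi) // 2
        if text[suffix_array[mid]:suffix_array[mid] + m] <= pattern:
            lo = mid + 1
        else:
            hi = mid

    return sorted(suffix_array[start:lo])
```

Once the index is built, each query needs only a logarithmic number of string comparisons instead of a scan over the whole text. For example, `find_occurrences("banana", build_suffix_array("banana"), "ana")` yields the positions 1 and 3.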

Big Data at the KIT

The topic of Big Data is highly relevant in various application scenarios. Not only science, but also users of new technologies increasingly face previously unknown problems. To manage these problems, the KIT works on various Big Data projects apart from SPP 1736. For instance, KIT is a partner of the Helmholtz project “Large Scale Data Management and Analysis” (LSDMA). This project pools expertise in handling big data, covering effective acquisition, storage, distribution, analysis, visualization, and archiving of data.

In addition, the KIT has been operating the Smart Data Innovation Lab (SDIL), a platform for Big Data research, since 2014. The SDIL delivers very high performance and can be used in practice by industry and science.
