Next-generation single-photon source for quantum information science

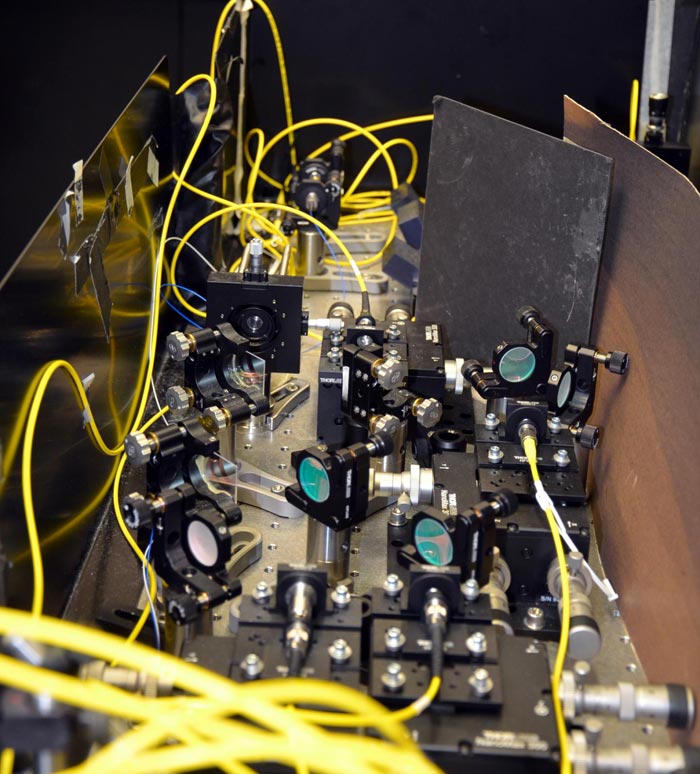

The experimental setup in Kwiat's lab at the Loomis Laboratory of Physics. Credit: Siv Schwink/University of Illinois Department of Physics

Over the last two decades, tremendous advances have been made in the field of quantum information science. Scientists are capitalizing on the strange nature of quantum mechanics to solve difficult problems in computing and communications, as well as in sensing and measuring delicate systems.

One avenue of research in this field is optical quantum information processing, which uses photons, tiny particles of light that have unique quantum properties.

A key resource to advance research in quantum information science would be a source that could efficiently and reliably produce single photons. However, because quantum processes are inherently random, creating a photon source that produces single photons on demand presents a challenge at every step.

Now University of Illinois Physics Professor Paul Kwiat and his former postdoctoral researcher Fumihiro Kaneda (now an assistant professor at Frontier Research Institute for Interdisciplinary Sciences at Tohoku University) have built what Kwiat believes is “the world's most efficient single-photon source.”

And they are still improving it. With planned upgrades, the apparatus could generate upwards of 30 photons at unprecedented efficiencies. Sources of that caliber are precisely what's needed for optical quantum information applications.

The researchers' current findings were published online in Science Advances on October 4, 2019.

Kwiat explains, “A photon is the smallest unit of light: Einstein's introduction of this concept in 1905 marked the dawn of quantum mechanics. Today, the photon is a proposed resource in quantum computation and communication–its unique properties make it an excellent candidate to serve as a quantum bit, or qubit.”

“Photons move quickly–perfect for long-distance transmission of quantum states–and exhibit quantum phenomena at the ordinary temperature of our everyday life,” adds Kaneda. “Other promising candidates for qubits, such as trapped ions and superconducting currents, are only stable in isolated and extremely cold conditions. So the development of on-demand single-photon sources is critical to realizing quantum networks and might enable small room-temperature quantum processors.”

To date, the maximum generation efficiency of useful heralded single photons has been quite low.

Why? Quantum optics researchers often use a nonlinear optical effect called spontaneous parametric down-conversion (SPDC) to produce photon pairs. When a laser pulse containing billions of photons passes through a specially designed crystal, a single high-energy photon from the pulse can split into a pair of lower-energy photons. Producing a pair is essential: detecting one photon of the pair, which destroys it, “heralds” the existence of its partner, and that partner is the single-photon output of the source.

But the conversion of one photon into two is rare, and whether it happens in any given pulse is left to chance.

“SPDC is a quantum process, and it's uncertain whether the source will produce nothing, or one pair, or two pairs,” Kwiat notes. “The probability of producing exactly one pair of single photons is at most 25 percent.”
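The 25 percent ceiling is consistent with single-mode thermal pair statistics, in which the chance of exactly n pairs for a mean pair number μ is P(n) = μ^n / (1 + μ)^(n+1). The sketch below assumes that model (the article does not name one) and scans μ numerically; P(1) peaks at exactly 1/4 when μ = 1. At that setting the distribution is 1/2, 1/4, 1/8, and so on, so half of all pulses produce nothing and another quarter produce more than one pair.

```python
def thermal_pair_probability(n: int, mu: float) -> float:
    """Probability of exactly n pairs for single-mode thermal statistics with mean mu."""
    return mu**n / (1.0 + mu) ** (n + 1)


def best_single_pair_probability(mu_max: float = 5.0, steps: int = 10_000):
    """Scan mu on a uniform grid and return (mu, P(1)) where P(1) is largest."""
    best_mu, best_p1 = 0.0, 0.0
    for i in range(1, steps + 1):
        mu = mu_max * i / steps
        p1 = thermal_pair_probability(1, mu)
        if p1 > best_p1:
            best_mu, best_p1 = mu, p1
    return best_mu, best_p1


mu, p1 = best_single_pair_probability()
print(f"P(exactly one pair) peaks at {p1:.3f} for mean pair number {mu:.2f}")
for n in range(4):
    print(f"P({n}) = {thermal_pair_probability(n, mu):.4f}")
```

Under a Poissonian model instead, the single-pair peak would be 1/e, about 37 percent, so the quoted figure points to the thermal case.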

Fumihiro Kaneda, assistant professor at the Frontier Research Institute for Interdisciplinary Sciences at Tohoku University, is a former postdoctoral researcher in the Kwiat group at the Department of Physics, University of Illinois at Urbana-Champaign.

Kwiat and Kaneda addressed this low-efficiency problem using a technique called time multiplexing. In each run, the SPDC source is pulsed 40 times at equal intervals, producing 40 “time bins,” each of which may contain a photon pair (although any individual bin rarely does). Whenever a pair is produced, one photon of the pair is detected, and that detection triggers an optical switch that routes the sister photon into temporary storage in an optical delay line, a closed loop formed by mirrors. Because the detection time of the trigger photon tells the researchers when the sister photon entered the loop, they know exactly how many cycles to hold it before switching it out. In this way, no matter which of the 40 pulses produced the pair, the stored photon is always released at the same time, after all 40 pulses have occurred, as though it had come from a single fixed time bin.
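The multiplexing scheme can be sketched as a small Monte Carlo simulation. The 40 bins and the 1.2 percent loss per cycle come from the article; the per-bin pair probability `PAIR_PROB` is hypothetical, as is the storage policy (a newly heralded photon replaces any older stored one, since it needs fewer lossy cycles). Multi-pair events and all losses outside the loop are ignored. The closed form sums over j, the number of cycles the kept photon is stored: its bin must herald (probability p), the j later bins must not ((1 − p)^j), and it must survive j cycles ((1 − loss)^j).

```python
import random

N_BINS = 40          # pulses (time bins) per run, as in the article
LOOP_LOSS = 0.012    # 1.2 percent loss per storage cycle, as in the article
PAIR_PROB = 0.1      # hypothetical chance that a single bin yields a heralded pair


def run_once(rng: random.Random, n_bins: int = N_BINS,
             pair_prob: float = PAIR_PROB, loop_loss: float = LOOP_LOSS) -> bool:
    """Simulate one multiplexed run and report whether a photon is released."""
    last_herald = None
    for k in range(n_bins):
        if rng.random() < pair_prob:
            # Herald detected: the switch routes the sister photon into the loop.
            # Assumed policy: a newer photon replaces any older stored one.
            last_herald = k
    if last_herald is None:
        return False                     # no pair in any bin this run
    cycles = n_bins - 1 - last_herald    # cycles held until the common release time
    return rng.random() < (1.0 - loop_loss) ** cycles


def emission_probability_mc(trials: int = 50_000, seed: int = 1, **params) -> float:
    """Monte Carlo estimate of the per-run single-photon output probability."""
    rng = random.Random(seed)
    return sum(run_once(rng, **params) for _ in range(trials)) / trials


def emission_probability_exact(n_bins: int = N_BINS, pair_prob: float = PAIR_PROB,
                               loop_loss: float = LOOP_LOSS) -> float:
    """Closed form: sum over j, the number of cycles the kept photon is stored."""
    per_cycle = (1.0 - pair_prob) * (1.0 - loop_loss)
    return sum(pair_prob * per_cycle**j for j in range(n_bins))


print("single bin, no multiplexing:", PAIR_PROB)
print("40-bin multiplexing (Monte Carlo):", emission_probability_mc())
print("40-bin multiplexing (exact):", round(emission_probability_exact(), 4))
```

With these illustrative numbers the multiplexed output probability is several times the single-bin value, which is the effect Kwiat describes next.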

Kwiat comments, “Mapping a bunch of different possibilities, all the different time bins, to one–it greatly improves the likelihood that you're able to see something.”

Pulsing the source 40 times makes it very likely that at least one photon pair is produced in each run.
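How close 40 pulses come to a guarantee depends on the per-pulse pair probability p, which the article does not give. Assuming independent pulses, the chance of at least one pair is 1 − (1 − p)^40. The values of p below are hypothetical: at p = 0.1 the chance is about 98.5 percent, while at p = 0.02 it drops to roughly 55 percent.

```python
def prob_at_least_one_pair(pair_prob: float, n_bins: int = 40) -> float:
    """Chance that at least one of n_bins independent pulses yields a pair."""
    return 1.0 - (1.0 - pair_prob) ** n_bins


def bins_needed(pair_prob: float, target: float) -> int:
    """Smallest number of pulses whose chance of at least one pair reaches target."""
    if not (0.0 < pair_prob <= 1.0 and 0.0 < target < 1.0):
        raise ValueError("need 0 < pair_prob <= 1 and 0 < target < 1")
    n = 1
    while prob_at_least_one_pair(pair_prob, n) < target:
        n += 1
    return n


for p in (0.02, 0.05, 0.1, 0.2):   # hypothetical per-pulse pair probabilities
    print(f"p = {p:.2f}: P(>=1 pair in 40 pulses) = {prob_at_least_one_pair(p):.3f}, "
          f"pulses for 99% = {bins_needed(p, 0.99)}")
```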

What's more, the delay line that stores the photons has a loss of only 1.2 percent per cycle. Because the source is pulsed so many times, this low loss is crucial: otherwise, photons produced in the first few pulses, which must circulate for the most cycles, could easily be lost.
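The compounding effect of per-cycle loss is easy to check. Assuming one loop cycle per pulse interval, a photon from the first of 40 pulses is stored for about 39 cycles. At the quoted 1.2 percent loss it survives with probability 0.988^39, roughly 62 percent; a hypothetical 5 percent loop would leave it under 14 percent.

```python
LOOP_LOSS = 0.012   # 1.2 percent loss per cycle, from the article


def survival(cycles: int, loss_per_cycle: float = LOOP_LOSS) -> float:
    """Probability that a stored photon survives the given number of loop cycles."""
    return (1.0 - loss_per_cycle) ** cycles


# A photon from the first of 40 pulses is assumed to circulate for 39 cycles.
for cycles in (1, 10, 20, 39):
    print(f"{cycles:2d} cycles: 1.2% loop -> {survival(cycles):.3f}, "
          f"hypothetical 5% loop -> {survival(cycles, 0.05):.3f}")
```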

When the photons are finally released, they are coupled into a single-mode optical fiber at a high efficiency. This is the state that the photons need to be in to be useful in quantum information applications.

As Kwiat points out, the efficiency gain from generating photons this way is significant. If, for example, an application called for a 12-photon source, one could line up six independent SPDC sources and wait for an event in which each of them simultaneously produced a single pair.
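Waiting for six independent sources to fire together is costly because the per-source probability enters to the sixth power: the event rate is the attempt rate times p^6. The sketch below assumes a single effective per-source probability p that lumps together pair generation, detection, and losses. Plugging in the competing experiment's figures quoted by Kwiat (80 million attempts per second, about one event every two minutes) implies p of roughly 2 percent, and doubling p would raise the event rate 64-fold.

```python
def coincidence_rate(attempt_rate_hz: float, per_source_prob: float, n_sources: int) -> float:
    """Rate of attempts in which every one of n_sources independent sources fires."""
    return attempt_rate_hz * per_source_prob ** n_sources


def implied_per_source_prob(attempt_rate_hz: float, event_rate_hz: float, n_sources: int) -> float:
    """Invert coincidence_rate to find the per-source probability it implies."""
    return (event_rate_hz / attempt_rate_hz) ** (1.0 / n_sources)


ATTEMPT_RATE_HZ = 80e6    # competing experiment: 80 million attempts per second
EVENT_RATE_HZ = 1 / 120   # about one six-pair event every two minutes
N_SOURCES = 6             # six SPDC sources for a 12-photon state

p = implied_per_source_prob(ATTEMPT_RATE_HZ, EVENT_RATE_HZ, N_SOURCES)
print(f"implied per-source probability: {p:.4f}")
print(f"doubling it would raise the event rate {2**N_SOURCES}x")
```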

“The world's best competing experiment at the moment using these multiple photon states had to wait something like two minutes until they got a single such event,” Kwiat notes. “They're pulsing at 80 million times a second–they're trying very, very often–but it's only about once every two minutes that they get this event where each source produces exactly one photon pair.

“We can calculate based on our rate the likelihood that we'd be able to produce something like that. We're actually driving quite a bit slower, so we're only making the attempt every 2 microseconds–they're trying it 160 times as often–but because our efficiency is so much higher using multiplexing, we'd actually be able to produce something like 4,000 12-photon events per second.”

In other words, Kwiat and Kaneda's production rate is about 500,000 times faster.
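The comparison can be reproduced from the quoted figures. An attempt every 2 microseconds is 500,000 attempts per second, so the competitor tries 160 times as often; 4,000 events per second against one event per 120 seconds is a ratio of about 480,000, which rounds to the 500,000 stated above. As a further back-of-the-envelope step, and only under the assumption that a 12-photon event means 12 independent copies of the multiplexed source each delivering a photon with the same probability η, the projected rate would imply η of about 0.67.

```python
THEIR_ATTEMPT_RATE = 80e6     # attempts per second, competing experiment
OUR_ATTEMPT_RATE = 1 / 2e-6   # one attempt every 2 microseconds (500 kHz)
THEIR_EVENT_RATE = 1 / 120    # about one 12-photon event every two minutes
OUR_EVENT_RATE = 4_000        # projected 12-photon events per second

attempt_ratio = THEIR_ATTEMPT_RATE / OUR_ATTEMPT_RATE
speedup = OUR_EVENT_RATE / THEIR_EVENT_RATE

# Assumption: 12 independent copies of the multiplexed source, each delivering
# one photon per attempt with the same probability eta.
eta = (OUR_EVENT_RATE / OUR_ATTEMPT_RATE) ** (1 / 12)

print(f"attempt-rate ratio: {attempt_ratio:.0f}")
print(f"event-rate speedup: {speedup:,.0f}")
print(f"implied per-photon probability: {eta:.3f}")
```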

However, as Kwiat notes, a few problems remain to be solved. One issue stems from the random nature of the down-conversion process: there's a chance that multiple photon pairs are produced instead of a single pair. Furthermore, because the down-conversion process used in this experiment was relatively inefficient, the source was “driven” harder, increasing the probability that such unwanted multiple pairs would be generated.
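Why driving harder raises the multi-pair risk can be seen in a simple model. Assuming single-mode thermal statistics (an assumption, not a detail from the article), the probabilities are P(0) = 1/(1 + μ), P(1) = μ/(1 + μ)^2 and P(≥2) = (μ/(1 + μ))^2, so the ratio of multi-pair to single-pair events equals the mean pair number μ itself. The μ values below are illustrative.

```python
def thermal_probs(mu: float) -> tuple[float, float, float]:
    """Return P(0), P(1), P(>=2) for single-mode thermal pair statistics."""
    p0 = 1.0 / (1.0 + mu)
    p1 = mu / (1.0 + mu) ** 2
    p_multi = (mu / (1.0 + mu)) ** 2   # algebraically equal to 1 - p0 - p1
    return p0, p1, p_multi


for mu in (0.01, 0.1, 0.5, 1.0):   # illustrative mean pair numbers per pulse
    p0, p1, pm = thermal_probs(mu)
    print(f"mu = {mu:<4}  P(1) = {p1:.4f}  P(>=2) = {pm:.4f}  "
          f"P(>=2)/P(1) = {pm / p1:.3f}")
```

Raising μ makes single pairs more frequent, but multi-pair contamination grows in proportion, which is the trade-off described above.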

Even accounting for potential multiphoton events, the efficiency of this experiment set a world record.

So what's next, and how will the Kwiat team address these rare unwanted multiphoton events?

Colin Lualdi, a graduate student in Kwiat's research group, is upgrading the source with photon-number-resolving detectors, which would identify multiphoton events so they can be discarded before the delay line is triggered to store them. In principle, this improvement would eliminate the issue of multiphoton events altogether.
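The benefit of number-resolving heralds can be sketched by comparing two detector models on the same pulses: a threshold detector that fires whenever at least one herald photon is detected, and a photon-number-resolving (PNR) detector whose event is accepted only when exactly one photon is detected. The thermal pair statistics, the mean pair number `MU` and the detector efficiencies `eta` are all hypothetical. With a perfect PNR detector no multi-pair events are stored; with imperfect efficiency some still slip through (for example, when only one of two herald photons is detected), though far fewer than with a threshold detector.

```python
def thermal(n: int, mu: float) -> float:
    """Probability of n pairs in one pulse (single-mode thermal assumption)."""
    return mu**n / (1.0 + mu) ** (n + 1)


def herald_threshold(n: int, eta: float) -> float:
    """Threshold detector: fires if at least one of n herald photons is detected."""
    return 1.0 - (1.0 - eta) ** n


def herald_pnr(n: int, eta: float) -> float:
    """Number-resolving detector: accept only if exactly one photon is detected."""
    return n * eta * (1.0 - eta) ** (n - 1)


def multipair_fraction(mu: float, eta: float, herald, n_max: int = 200) -> float:
    """Fraction of accepted (heralded) pulses that actually held two or more pairs."""
    accepted = [thermal(n, mu) * herald(n, eta) for n in range(1, n_max)]
    return sum(accepted[1:]) / sum(accepted)   # accepted[0] is the n = 1 term


MU = 0.1   # hypothetical mean pair number per pulse
for eta in (1.0, 0.9, 0.7):   # hypothetical herald-detector efficiencies
    print(f"eta = {eta}: threshold {multipair_fraction(MU, eta, herald_threshold):.4f}, "
          f"PNR {multipair_fraction(MU, eta, herald_pnr):.4f}")
```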

Another area of ongoing research for Kwiat's team aims to improve the efficiency of the individual components of the single-photon-source apparatus. Lualdi believes future improvements will push the rate of single-photon production far beyond that of the current experiment.
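Component upgrades matter because losses in series multiply: the overall transmission of the heralded photon's path is the product of each stage's transmission. The component names and values below are purely hypothetical placeholders, not figures from the experiment; the sketch shows how raising any single stage lifts the whole chain.

```python
from math import prod

# Hypothetical transmissions along the heralded photon's path, for illustration only.
BASELINE = {
    "collection": 0.90,
    "optical_switch": 0.98,
    "spectral_filter": 0.95,
    "fiber_coupling": 0.90,
}


def chain_efficiency(parts: dict[str, float]) -> float:
    """Overall transmission of components in series: the product of each stage."""
    return prod(parts.values())


def with_upgrade(parts: dict[str, float], name: str, value: float) -> dict[str, float]:
    """Return a copy of the budget with one component's transmission replaced."""
    updated = dict(parts)
    updated[name] = value
    return updated


print(f"baseline: {chain_efficiency(BASELINE):.3f}")
for name in BASELINE:
    upgraded = with_upgrade(BASELINE, name, 0.99)
    print(f"{name} -> 0.99: {chain_efficiency(upgraded):.3f}")
```

Because many-photon event rates scale as a power of the single-photon efficiency, even modest per-component gains compound quickly.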

“The eventual goal is to be able to prepare single pure quantum states that we can use to encode and process information in ways that surpass classical approaches,” Lualdi explains. “That's why it's so imperative that these sources produce single photons. If the source unexpectedly generates two photons instead of one, then we don't have the basic building block that we need.”

And in order to be able to perform any kind of meaningful quantum information processing with these photonic qubits, a large supply is needed.

As Kwiat puts it, “The field is moving beyond experiments with just one or two photons. People are now trying to do experiments on 10 to 12 photons, and eventually we'd like to have 50 to 100 photons.”

Kwiat projects that these improvements could pave the way toward generating more than 30 photons at high efficiency. Kwiat and Kaneda's results have moved the field one step closer to making optical quantum information processing a reality.
